Evaluation

Program evaluation takes time and planning, but it is worth the effort because it tells you whether the intervention was carried out as intended and whether it had the impact you wanted. This Section highlights key evaluation concepts and directs you to a reliable evaluation framework and related information developed for public health programs by the Centers for Disease Control and Prevention (CDC). Following these established methods will help you document your success and provide feedback for improving your program.

This Section includes guidance on evaluation of a health intervention or program.

Program evaluation is an essential public health service. Reasons to evaluate a public health program include¹:

- Monitor progress toward the program’s goals

- Determine whether program components are producing the desired progress on outcomes

- Permit comparisons among groups, particularly among populations with disproportionately high risk factors and adverse health outcomes

- Justify the need for further funding and support

- Find opportunities for continuous quality improvement

- Ensure that effective programs are maintained and that resources are not wasted on ineffective programs

CDC has developed a framework for program evaluation in public health that includes the following steps:

- Engage stakeholders

- Describe the program

- Focus the evaluation design

- Gather credible evidence

- Justify conclusions

- Ensure use of conclusions and share lessons learned

The framework also includes four sets of standards for conducting good evaluations of public health programs: utility, feasibility, propriety, and accuracy.

RESOURCES AVAILABLE:

- 6.1a Framework for Program Evaluation in Public Health

- 6.1b Detailed Description of CDC’s Program Evaluation Framework and Other Program Evaluation Resources

Z-CAN Example: Overview of Z-CAN Monitoring and Evaluation Plan

The Z-CAN monitoring and evaluation plan aimed to assess implementation and associated outcomes of the Z-CAN program. The specific objectives were to assess:

- Perceived facilitators and barriers to accessing reversible contraception in Puerto Rico among both Z-CAN and non-Z-CAN clients

- Z-CAN physician and clinic staff perceptions of program elements that worked and did not work well to inform potential replication or adaptation in other future emergency response efforts

- Contraceptive use patterns, unintended pregnancy, and client satisfaction among Z-CAN clients at various times after receipt of program services

- Unmet need for services among Z-CAN clients after the program end date

A mixed-methods approach, combining qualitative and quantitative data, was used to gather data. The approach included focus groups with women enrolled in Z-CAN and with women not enrolled, stratified by age (18–25 and 25 years and up); semi-structured individual interviews with Z-CAN physicians and clinic staff; and online surveys with Z-CAN physicians, clinic staff, and women who received services through Z-CAN.

RESOURCES AVAILABLE:

- 6.1c Focus Group Discussion Guide for Women Enrolled in Z-CAN Program

- 6.1d Focus Group Discussion Guide for Women Not Enrolled in Program

- 6.1e Interview Guide for Physicians in Z-CAN

- 6.1f Interview Guide for Staff in Z-CAN

- 6.1g Online Survey for Physicians in Z-CAN

- 6.1h Online Survey for Z-CAN Patients (6- and 12-Month Follow-up)

Lessons Learned: Evaluation Planning

- Think about program evaluation from the very beginning.

- Engage a broad group of stakeholders with varying perspectives.

- Consider both process/implementation and outcome/effectiveness measures.

- Identify strategies to incorporate interim evaluation findings to improve program implementation.

- Allow extra time for translation and translation verification when documents, such as data-collection instruments for participants, are developed in one language and used in another.

Z-CAN Example: Using Interim Evaluation Findings

Z-CAN program staff conducted focus groups over several months. Rather than waiting until final data were collected and analyzed, research staff reported weekly on key themes that emerged about how women learned about the Z-CAN program. For example, women not enrolled in Z-CAN reported hearing about the program but not seeking services because it sounded “too good to be true.” The program adjusted its communication strategies to address this misperception, which improved program implementation.

¹ From Introduction to Program Evaluation for Public Health Programs: A Self-Study Guide, https://www.cdc.gov/eval/guide/introduction/index.htm

Overview of Process Evaluation

Before you assess program outcomes and goals, you must first carry out a process evaluation. This key component of evaluation measures the extent of program implementation and fidelity to the proposed program model (whether your program was carried out as intended).

You can use timely data collection and analysis within a process evaluation to identify barriers to, or needs for, program implementation and to develop plans to correct or improve implementation so that it conforms closely to the program model.

Z-CAN Example: Process Evaluation for Monitoring Program Implementation

We developed and implemented a patient satisfaction survey to learn about women’s experiences during their initial Z-CAN visit. The survey helped us understand whether women were satisfied with their counseling session, services, and the method they received, and whether key Z-CAN program activities were delivered as intended.

RESOURCE AVAILABLE:

Respondents reported on receipt of:

- High-quality, client-centered counseling

- Contraceptive method of choice on the same day of initial visit

- Free Z-CAN services and contraceptive method

- Information about free removals of various long-acting reversible contraception methods

The primary purpose of this data collection was to:

- Identify additional training needs for physicians and contraception counseling staff

- Assure receipt of high-quality, client-centered contraceptive counseling

- Determine fidelity to key programmatic requirements (women who participate in Z-CAN receive a contraceptive method of their choice at no cost, on the same day as their Z-CAN appointment)
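The fidelity check in the last bullet can be summarized as a simple proportion once survey responses are tabulated. The sketch below is illustrative only: the `SurveyResponse` fields, the `meets_fidelity` and `fidelity_rate` helpers, and the sample responses are all hypothetical and do not reflect the actual Z-CAN survey instrument or data. It assumes each response records three yes/no items matching the requirements named above.

```python
from dataclasses import dataclass


@dataclass
class SurveyResponse:
    # Hypothetical yes/no items; the real survey items are not shown in this guide.
    got_method_of_choice: bool
    same_day: bool
    no_cost: bool


def meets_fidelity(response: SurveyResponse) -> bool:
    """True only when all three programmatic requirements were met."""
    return response.got_method_of_choice and response.same_day and response.no_cost


def fidelity_rate(responses: list[SurveyResponse]) -> float:
    """Share of respondents whose visit met every fidelity criterion."""
    if not responses:
        raise ValueError("no survey responses to summarize")
    met = sum(meets_fidelity(r) for r in responses)
    return met / len(responses)


# Made-up responses for illustration, not Z-CAN data.
sample = [
    SurveyResponse(True, True, True),
    SurveyResponse(True, False, True),   # method was not provided the same day
    SurveyResponse(True, True, True),
    SurveyResponse(False, True, True),   # did not receive the method of choice
]

rate = fidelity_rate(sample)
print(f"{rate:.0%} of respondents met all three fidelity criteria")
```

Tracking this proportion at regular intervals, rather than only at the end of the program, is one way to spot clinics or time periods where additional training may be needed.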

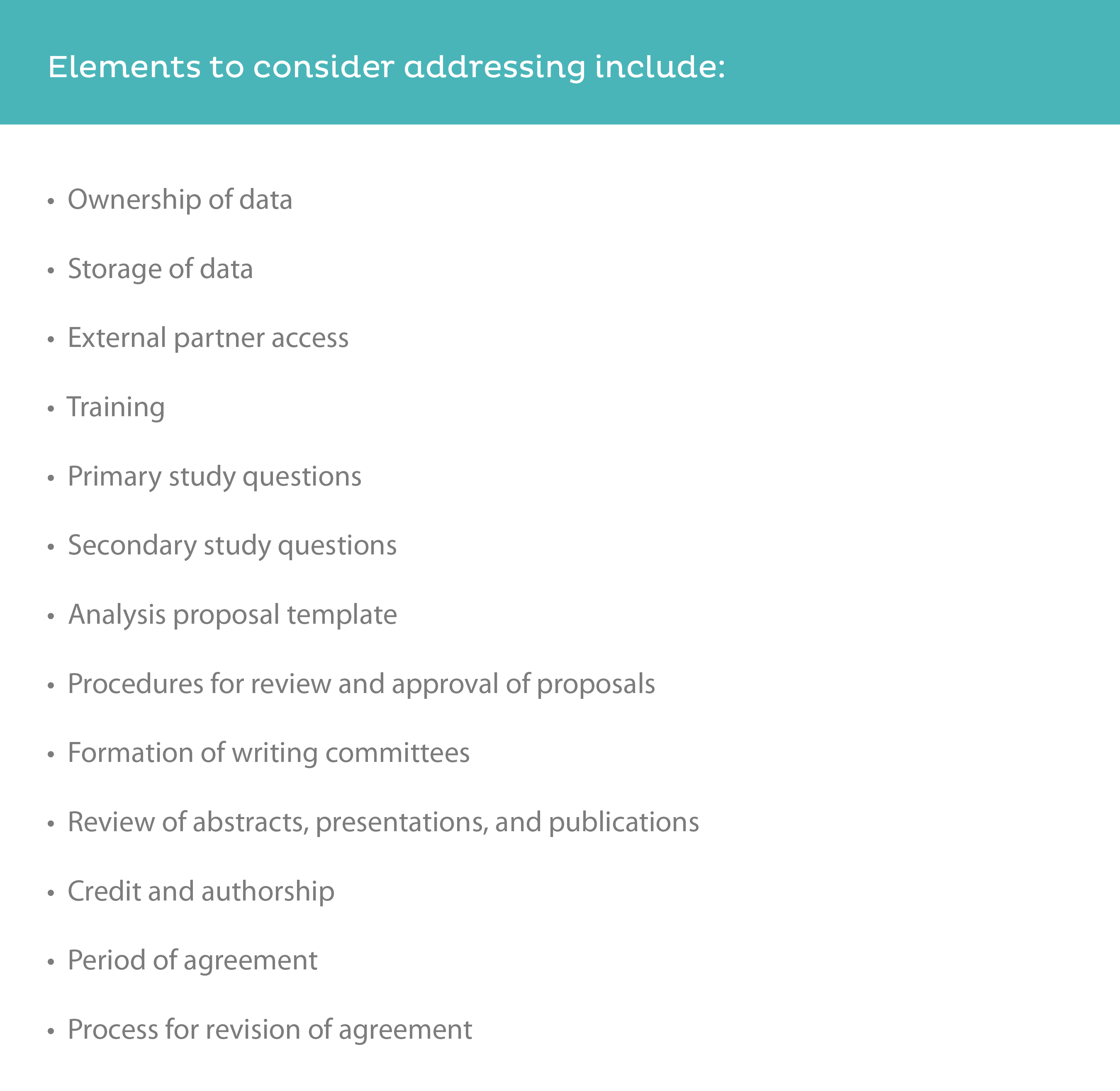

When more than one agency or organization is involved, it is wise to establish data-sharing and publication policy agreements between entities to outline conditions and expectations clearly.

Elements to consider addressing include:

- Ownership of data

- Storage of data

- External partner access

- Training

- Primary study questions

- Secondary study questions

- Analysis proposal template

- Procedures for review and approval of proposals

- Formation of writing committees

- Review of abstracts, presentations, and publications

- Credit and authorship

- Period of agreement

- Process for revision of agreement